Assessing And Implementing Clinical Trial Technologies: A 3-Step, Adaptive Approach

By Jeffrey S. Handen, Ph.D., Grant Thornton LLP

Though estimates vary, it is generally accepted that a significant and unacceptable percentage of IT projects fail. According to a 2017 report from the Project Management Institute, 14 percent of all IT projects were total failures; of the remaining projects, 31 percent didn’t meet their goals, 43 percent were over budget, and 49 percent exceeded their timelines.[1] According to a Gartner survey, 20 to 28 percent of all IT projects fail, depending on the size of the project.[2] And McKinsey’s data shows that, on average, IT projects run 45 percent over budget while delivering 56 percent fewer benefits than predicted.[3]

Technologies and digital innovations, particularly those targeting the clinical research environment, are evolving at dizzying rates. Given how often IT projects fail, the need to assess and implement these opportunities efficiently is greater than ever.

A recent study found that 64 percent of researchers have used digital health tools in their clinical trials, and 97 percent plan to use these tools in the next five years.[4] Opportunities for digital tools in clinical research will only increase, and these include a myriad of technologies, such as:

- online data portals that track dosing;

- smartphone apps and/or voice-activated technologies such as Amazon Echo and Apple’s Siri that encourage patient adherence and facilitate patient-doctor communication to reduce dropout and loss-to-follow-up rates;

- electronic drug labeling;

- social platforms to facilitate recruitment and define the patient experience for input into protocol development;

- crowdsourcing for clinical trials recruitment;

- Big Data analytics driving risk-based monitoring;

- telemedicine, smartphones, and other wireless cloud-based technologies that promise the “site-less trial,” where endpoint data is collected without the patient ever having to visit the doctor’s office;

- synthetic trial arms;

- semantic technologies to analyze unstructured data from scientific literature and social media to gain clinical insights;

- EMR/EHR data leveraged for feasibility assessments and recruitment;

- eSource;

- eConsent; and

- online PI training.

The challenge then becomes how to evaluate digital technologies effectively and efficiently in real-life clinical trials. Organizations need a process for assessing quantitative and qualitative ROI without interfering with adherence to Good Clinical Practice (GCP). At the same time, the process must measure potential efficiencies to determine whether they justify the total cost of implementation, that is, whether they overcome the switching barrier. How do we bring “fail early” into the already-existing “fail often” paradigm?

The answer is rapid prototyping in actual, ongoing clinical trials with stakeholders, during an initial three-month period following implementation that allows multiple digital technologies and/or multiple vendors to be evaluated simultaneously. Evaluations must not interfere with adherence to GCP or any other relevant regulations that ensure human subject protection and the reliability of clinical trial results.

Any effective digital innovation must have a significant, measurable, positive impact on time, cost, quality, or some combination of these to justify the total cost of implementation, both by overcoming the switching barrier and by providing sufficient incremental value. In addition, organizations must consider qualitative dimensions such as the scalability of the technologies; compatibility with existing systems, procedures, and processes; the investment required for retraining and for creating or modifying SOPs and other controlled documents; and user experience.
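The justification test above can be made concrete as simple arithmetic: a technology is worth adopting only if the value it adds on time, cost, and quality exceeds its total cost of implementation, including the switching costs of retraining and document updates. The sketch below assumes every benefit has already been monetized in a single currency; the class name, fields, and figures are hypothetical illustrations, not part of the approach as described.

```python
from dataclasses import dataclass


@dataclass
class TechnologyCase:
    """Hypothetical business case for one candidate digital technology.

    All amounts are assumed to be monetized in the same currency.
    """
    name: str
    time_savings_value: float      # value assigned to cycle-time reduction (assumption)
    cost_savings: float            # direct operating costs avoided
    quality_savings_value: float   # value assigned to fewer queries, deviations, etc. (assumption)
    license_and_integration: float
    retraining: float
    sop_updates: float             # creating/modifying SOPs and other controlled documents

    def total_cost_of_implementation(self) -> float:
        # The switching barrier: everything it costs to move off the current process.
        return self.license_and_integration + self.retraining + self.sop_updates

    def incremental_value(self) -> float:
        # Combined measurable impact on time, cost, and quality.
        return self.time_savings_value + self.cost_savings + self.quality_savings_value

    def net_value(self) -> float:
        return self.incremental_value() - self.total_cost_of_implementation()

    def is_justified(self) -> bool:
        # Justified only when the measurable benefit exceeds the full cost of switching.
        return self.net_value() > 0


# Illustrative figures only.
econsent = TechnologyCase("eConsent", 120_000, 40_000, 15_000, 90_000, 25_000, 10_000)
print(econsent.net_value(), econsent.is_justified())  # 50000 True
```

The qualitative dimensions (scalability, compatibility, user experience) do not reduce to a single number, so this calculation deliberately leaves them out; they are weighed alongside it.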

Rapid prototyping entails a three-step adaptive approach: building a configurable model, running a virtual/conference room pilot, and performing an interim data analysis.

1. Configuring The Model

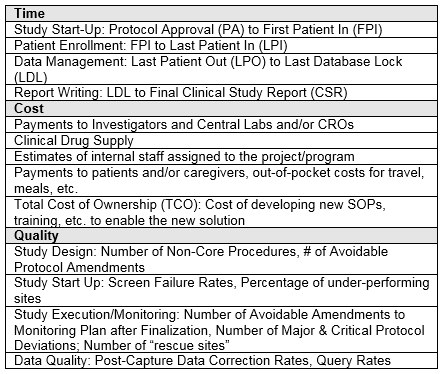

First, organizations must build a configurable financial and resourcing model of a representative study, with the critical levers identified. At this point, they develop phase- and therapeutic area-specific baseline configurations using benchmarking and/or expert input. They define the specific critical path levers of time, cost, and quality in advance; examples of these levers are shown in the accompanying table. Levers are, by definition, critical path milestones or parameters.
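A configurable model of this kind can be sketched in a few dozen lines. The version below is a minimal, hypothetical illustration rather than the author's model: it assumes a handful of named levers, a sequential critical path, and a cost structure of per-patient plus fixed monthly costs, with placeholder baseline values keyed by phase and therapeutic area.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class StudyModel:
    """Hypothetical critical-path levers for a representative study."""
    site_activation_months: float
    enrollment_months: float
    treatment_months: float
    closeout_months: float
    patients: int
    cost_per_patient: float
    monthly_fixed_cost: float
    queries_per_patient: float  # a simple quality lever

    def duration_months(self) -> float:
        # Simplifying assumption: the phases run back to back on the critical path.
        return (self.site_activation_months + self.enrollment_months
                + self.treatment_months + self.closeout_months)

    def total_cost(self) -> float:
        # Variable per-patient cost plus fixed cost for every month the study runs.
        return (self.patients * self.cost_per_patient
                + self.duration_months() * self.monthly_fixed_cost)


# Phase- and TA-specific baseline configurations. The numbers are placeholders
# to be replaced with benchmark data and/or expert input.
BASELINES: Dict[Tuple[str, str], StudyModel] = {
    ("Phase II", "Oncology"): StudyModel(6, 18, 12, 4, 200, 45_000, 150_000, 8),
    ("Phase III", "Cardiology"): StudyModel(5, 14, 24, 4, 1_500, 20_000, 300_000, 5),
}


def apply_levers(model: StudyModel, pct_change: Dict[str, float]) -> StudyModel:
    """Return a copy of the model with each named lever scaled by (1 + fractional change)."""
    changes = {lever: getattr(model, lever) * (1 + pct) for lever, pct in pct_change.items()}
    return replace(model, **changes)


base = BASELINES[("Phase II", "Oncology")]
projected = apply_levers(base, {"enrollment_months": -0.25, "queries_per_patient": -0.30})
print(base.duration_months(), projected.duration_months())  # 40 35.5
print(base.total_cost(), projected.total_cost())  # 15000000 14325000.0
```

Keeping the baseline immutable and producing a modified copy per scenario means the same reference study can be reused for every technology under evaluation.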

2. Virtual Pilot

The next step in rapid prototyping is to assess potential digital technologies against the levers they could modify and whose impact can be quantified. Critical to the evaluation is assessing the technologies in an “apples to apples” manner. Organizations can therefore compare multiple digital technologies against each other in a virtual study of their hypothetical impact, even before implementation. To compare similar technologies from different vendors, organizations can segment the clinical study into different “digital technology treatment arms” in the virtual environment. With the projected effects hypothesized, implementation then proceeds, and the relevant metrics and leading indicators are collected over a three-month period.
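The apples-to-apples comparison of virtual treatment arms can be sketched by applying each arm's hypothesized lever changes to one shared baseline and ranking the projected results. Everything here is an assumption for illustration: the lever names, the vendor arms, their hypothesized effects, and the monthly cost figure.

```python
from typing import Dict, List, Tuple

# One shared baseline so every arm is compared apples to apples (placeholder values).
BASELINE: Dict[str, float] = {
    "site_activation_months": 6.0,
    "enrollment_months": 18.0,
    "treatment_months": 12.0,
    "closeout_months": 4.0,
}
MONTHLY_FIXED_COST = 150_000.0  # hypothetical cost of each additional study month

# Hypothetical "digital technology treatment arms": each maps the levers it is
# expected to touch to a hypothesized fractional change.
ARMS: Dict[str, Dict[str, float]] = {
    "Vendor A eConsent": {"site_activation_months": -0.10, "enrollment_months": -0.05},
    "Vendor B eConsent": {"enrollment_months": -0.12},
    "Recruitment app": {"enrollment_months": -0.20, "closeout_months": 0.05},
}


def projected_duration(baseline: Dict[str, float], changes: Dict[str, float]) -> float:
    # Levers an arm does not touch keep their baseline value.
    return sum(months * (1 + changes.get(lever, 0.0)) for lever, months in baseline.items())


def rank_arms(baseline: Dict[str, float], arms: Dict[str, Dict[str, float]],
              monthly_cost: float) -> List[Tuple[str, float, float]]:
    """Return (arm, months saved, cost avoided) tuples, best arm first."""
    base = projected_duration(baseline, {})
    results = []
    for name, changes in arms.items():
        saved = base - projected_duration(baseline, changes)
        results.append((name, saved, saved * monthly_cost))
    return sorted(results, key=lambda r: r[1], reverse=True)


for name, months, dollars in rank_arms(BASELINE, ARMS, MONTHLY_FIXED_COST):
    print(f"{name}: {months:.2f} months, ${dollars:,.0f}")
```

Only time is ranked in this sketch; cost and quality levers could be scored the same way against the same baseline. The hypothesized savings per arm also become the projections that the interim analysis later checks against actual results.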

3. Interim Data Analysis

Third, following the three-month evaluation period, organizations perform an interim data analysis to assess the efficacy of the proposed digital technologies and to quantify actual vs. projected efficiencies. We also suggest a Net Promoter Score (NPS) analysis at the end of the evaluation period to assess qualitative impact across all relevant stakeholder groups. There are various ways to conduct qualitative assessments; however, NPS allows for an extremely rapid and simple readout. Combining the quantitative and qualitative responses enables an “adaptive” response: drop any underperforming digital technologies or digital “arms” and switch the remainder of the trial to the proven digital technology.
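Both readouts of the interim analysis are simple to compute. NPS follows its standard definition: respondents answer one 0-10 question, scores of 9-10 count as promoters and 0-6 as detractors, and the score is the percentage of promoters minus the percentage of detractors. The sketch below pairs it with a hypothetical "realization" ratio (actual vs. projected efficiency) and an illustrative keep/drop rule; the thresholds, field names, and data are assumptions, not values from this article.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def net_promoter_score(scores: Sequence[int]) -> float:
    """NPS from 0-10 'How likely are you to recommend...?' answers.

    Promoters score 9-10, detractors 0-6; NPS = % promoters - % detractors.
    """
    if not scores:
        raise ValueError("at least one response is required")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def interim_decision(arms: Dict[str, dict], min_realization: float = 0.7,
                     min_nps: float = 0.0) -> Tuple[List[str], List[str], Optional[str]]:
    """Keep arms that clear both bars; return (kept, dropped, arm to switch to).

    The default thresholds are hypothetical and would be set per program.
    """
    kept: List[Tuple[str, float]] = []
    dropped: List[str] = []
    for name, arm in arms.items():
        # Realization: actual efficiency gain as a fraction of the projected gain.
        realization = arm["actual_savings"] / arm["projected_savings"]
        nps = net_promoter_score(arm["nps_scores"])
        if realization >= min_realization and nps >= min_nps:
            kept.append((name, arm["actual_savings"]))
        else:
            dropped.append(name)
    # Switch the remainder of the trial to the kept arm with the largest actual savings.
    winner = max(kept, key=lambda k: k[1])[0] if kept else None
    return [k[0] for k in kept], dropped, winner


# Illustrative interim data: savings in study months, NPS answers pooled across stakeholders.
arms = {
    "Vendor A eConsent": {"projected_savings": 1.5, "actual_savings": 1.2,
                          "nps_scores": [9, 10, 8, 7, 9, 6]},
    "Vendor B eConsent": {"projected_savings": 2.16, "actual_savings": 0.9,
                          "nps_scores": [10, 9, 9, 8]},
    "Recruitment app": {"projected_savings": 3.4, "actual_savings": 2.9,
                        "nps_scores": [5, 6, 9, 7, 4]},
}
print(interim_decision(arms))
```

Under this illustrative rule, Vendor B's arm is dropped because it realized well under half of its projected savings, and the recruitment app is dropped because its NPS of -40 falls below the qualitative bar, which shows why the two readouts are combined rather than used alone.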

Conclusion

This approach draws inspiration from backward chaining and goal-directed project management (GDPM). Both methodologies start with the desired result (a positive impact on time, cost, and/or quality) and work backward to identify the critical path processes and activities, or “levers,” in the proposed model. Backward chaining is an inference methodology that has been applied to areas as diverse as behavioral analysis for special education, occupational therapy, and AI. Growing out of the expert systems of the 1970s and used in logic programming languages such as Prolog, backward chaining at its simplest starts with the goal and works backward. GDPM, a project management methodology first introduced in the 1980s, has proven to be an efficient approach to project planning and to identifying key milestones and risks. Combining these straightforward approaches provides a constant line of sight to quantifiable end-state goals and requires relatively simple inputs once the model is developed. The only inputs are the levers a digital technology is expected to touch, the projected efficiencies, a single-question NPS survey following the three-month pilot implementation, and the efficiency gains actually achieved compared with projections.
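In logic-programming terms, backward chaining starts from a goal, finds rules that conclude it, and recursively tries to establish their premises, much as Prolog resolves a query. The minimal Python sketch below applies that idea to the framing used here: the goal is a positive impact on time, cost, or quality, intermediate conclusions stand in for levers, and leaf facts are deployed technologies. The rule base is entirely hypothetical and only illustrates the search; it is not an implementation of GDPM.

```python
from typing import Dict, FrozenSet, List, Optional, Set

# Hypothetical rule base: each conclusion maps to alternative lists of premises
# (every premise in a list must hold). Leaf terms are technologies or other facts.
RULES: Dict[str, List[List[str]]] = {
    "positive_impact": [["reduced_cycle_time"], ["reduced_cost"], ["improved_quality"]],
    "reduced_cycle_time": [["faster_enrollment"], ["faster_site_activation"]],
    "reduced_cost": [["fewer_monitoring_visits"]],
    "improved_quality": [["fewer_data_queries"]],
    "faster_enrollment": [["econsent_deployed", "recruitment_app_deployed"]],
    "fewer_monitoring_visits": [["risk_based_monitoring_deployed"]],
    "fewer_data_queries": [["esource_deployed"]],
}


def backward_chain(goal: str, facts: Set[str], rules: Dict[str, List[List[str]]],
                   visiting: FrozenSet[str] = frozenset()) -> Optional[List[str]]:
    """Work backward from `goal`; return the known facts that establish it, or None."""
    if goal in facts:
        return [goal]
    if goal in visiting:  # guard against circular rules
        return None
    for premises in rules.get(goal, []):
        support: List[str] = []
        for premise in premises:
            sub = backward_chain(premise, facts, rules, visiting | {goal})
            if sub is None:
                break  # this alternative fails; try the next one
            support.extend(sub)
        else:
            return support  # every premise in this alternative was established
    return None


print(backward_chain("positive_impact", {"risk_based_monitoring_deployed"}, RULES))
print(backward_chain("positive_impact", {"econsent_deployed"}, RULES))
```

Because the search returns its supporting facts, the same trace that establishes the goal also shows which levers and technologies that conclusion depends on.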

This approach also does not require large, complex investments in clinical trial modeling and simulation software. It is a rapid development and implementation framework that can produce outcomes in less than six weeks, followed by the three-month pilot technology implementation phase. Additionally, because the entire study is modeled, it allows baselines to be configured for sponsor-specific therapeutic areas (TAs) and/or indications, and it allows potential digital technology interventions to be assessed anywhere along the study life cycle, from Protocol Development and Study Planning through Study Conduct to Study Closeout and CSR Writing. Moreover, the NPS assessment offers a qualitative perspective on the user experience and behaviors of all stakeholders (e.g., patients, sites, sponsors), which can inform decision making.

References:

1. PMI’s Pulse of the Profession, 9th Global Project Management Survey, 2017

2. Gartner Survey, Why Projects Fail, June 2012

3. McKinsey and Company Survey, 2012

4. Insights on Digital Health Technology Survey 2016, Vladic

About The Author:

Jeffrey S. Handen, Ph.D., is a director in the Life Sciences practice at Grant Thornton LLP and leader in R&D Transformation. He has published in multiple peer-reviewed and business journals, presented at numerous industry conferences and scientific meetings as an invited speaker, and served as past editor in chief of the Industrialization of Drug Discovery and Re-inventing Clinical Development compendiums. Handen has more than 20 years’ experience in pharmaceutical and biotechnology R&D, process re-engineering, and systems and process implementation. He is responsible for overseeing business process improvement and solution architecting for optimizing both clinical development and discovery. You can contact him at jeff.handen@us.gt.com.
